Managing employee leave and vacations is a crucial part of Human Resources operations. It directly affects team productivity, employee satisfaction, and business continuity. Without a streamlined process, managing requests, tracking balances, and ensuring fair approval can become complex and error-prone.

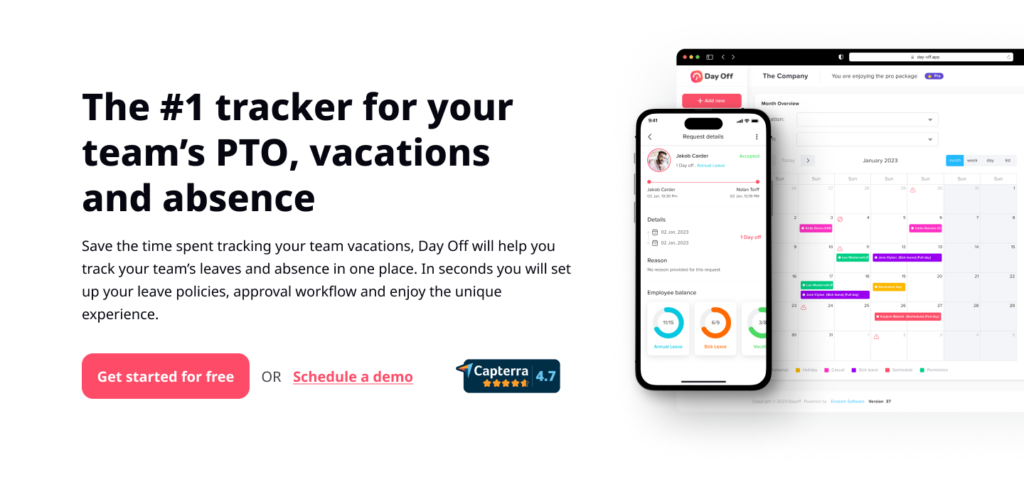

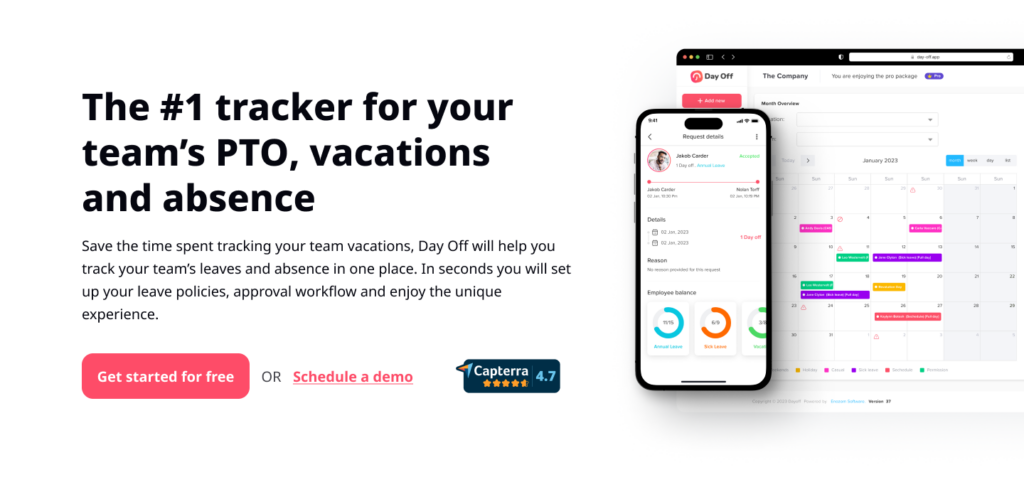

That’s where vacation tracker apps like Day Off come in. These tools have become indispensable for modern organizations seeking efficiency, accuracy, and transparency in leave management. They simplify how HR teams handle Paid Time Off (PTO), sick days, personal leave, and vacations, while empowering employees to plan their time off responsibly and confidently.

This article explores the key features that make a vacation tracker app effective, user-friendly, and essential for modern HR departments, with a deep dive into how Day Off delivers excellence in every aspect.

User-Friendly Vacation Tracker Interface

A successful vacation tracker starts with usability. The interface should be intuitive, responsive, and accessible to all users, regardless of technical expertise. An overly complicated system can frustrate employees and create bottlenecks, negating the benefits of automation.

Day Off stands out with its user-friendly dashboard, which offers a clean and organized layout for both employees and managers. Users can instantly see their:

- Current leave balances
- Pending or approved requests
- Upcoming vacations within their team

The app’s design ensures that employees can request time off in seconds, while managers can review requests and team availability at a glance. Accessibility is another key strength: Day Off works seamlessly across mobile devices, tablets, and desktop computers, allowing leave management anytime, anywhere.

This ease of use fosters transparency, engagement, and independence, empowering employees to manage their own leave while reducing HR’s administrative burden.

Real-Time Vacation Tracking and Automatic Updates

Manual leave tracking often leads to confusion and disputes, especially when balances aren’t updated promptly. A powerful vacation tracker eliminates this problem by updating leave balances automatically in real time.

With Day Off, every time an employee requests, cancels, or modifies leave, the system automatically adjusts their available balance. HR and managers can view these changes instantly, ensuring complete accuracy and preventing misunderstandings.

This real-time tracking not only promotes transparency but also helps employees plan their vacations confidently, knowing exactly how many days they have left. It also saves HR teams countless hours in recalculating and reconciling balances, leading to better efficiency and fewer errors.
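To make the idea of automatic balance updates concrete, here is a minimal Python sketch. It is not Day Off's actual implementation; the `LeaveBalance` class and its method names are hypothetical, and it simply shows how a balance could stay in step with requests, changes, and cancellations.

```python
from dataclasses import dataclass, field


@dataclass
class LeaveBalance:
    """Hypothetical per-employee balance (an assumption, not Day Off's data model)."""
    available_days: float
    booked: dict = field(default_factory=dict)  # request_id -> days booked

    def request(self, request_id: str, days: float) -> None:
        # Reject requests that exceed the remaining balance.
        if days > self.available_days:
            raise ValueError("insufficient balance")
        self.booked[request_id] = days
        self.available_days -= days

    def cancel(self, request_id: str) -> None:
        # Return the booked days to the balance.
        self.available_days += self.booked.pop(request_id)

    def modify(self, request_id: str, new_days: float) -> None:
        # Apply only the difference between the old and new duration.
        delta = new_days - self.booked[request_id]
        if delta > self.available_days:
            raise ValueError("insufficient balance")
        self.booked[request_id] = new_days
        self.available_days -= delta


balance = LeaveBalance(available_days=20)
balance.request("r1", 5)   # 15 days left
balance.modify("r1", 3)    # 17 days left
balance.cancel("r1")       # back to 20
```

Because every change goes through the same object, the balance an employee sees is always the result of their latest request, edit, or cancellation.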

Seamless Request and Approval Process

A vacation tracker app should simplify the request-approval workflow, making it smooth, fast, and transparent for all parties involved.

In Day Off, employees can submit requests with just a few clicks, specifying the leave type (vacation, personal, or sick leave) and desired dates. Once submitted, managers receive an automated notification, allowing them to approve or deny the request immediately from their dashboard or mobile device.

Managers can also see team calendars before making decisions, ensuring that approvals do not cause resource gaps. This automation eliminates back-and-forth emails and delays, creating a streamlined process where approvals are both efficient and accountable.

By automating this process, organizations experience shorter approval times, improved communication, and enhanced employee satisfaction.
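The team-calendar check a manager performs before approving can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Day Off's API: the `overlapping_absences` function and the sample `team_calendar` are hypothetical.

```python
from datetime import date


def overlapping_absences(start, end, approved):
    """Return names of teammates whose approved leave overlaps [start, end]."""
    return sorted(
        name
        for name, (leave_start, leave_end) in approved.items()
        if leave_start <= end and start <= leave_end
    )


# Hypothetical team calendar: name -> (first day off, last day off).
team_calendar = {
    "Ana": (date(2025, 7, 1), date(2025, 7, 10)),
    "Ben": (date(2025, 7, 14), date(2025, 7, 18)),
}

conflicts = overlapping_absences(date(2025, 7, 8), date(2025, 7, 15), team_calendar)
print(conflicts)  # ['Ana', 'Ben']
```

An empty result means no teammate is away during the requested dates; a non-empty one tells the manager exactly whose absence would overlap.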

Calendar Integration and Team Visibility

Calendar integration is one of the most powerful features of any advanced vacation tracker. It ensures that approved leaves automatically sync with Google Calendar, Outlook Calendar, and other productivity tools, providing visibility across the organization.

Day Off takes this a step further by offering team-level calendar views, allowing managers to visualize who is on leave at any given time. This holistic view helps prevent overlapping absences, maintain adequate staffing, and plan projects effectively.

For employees, seeing their colleagues’ planned leaves helps in collaboration and workload planning. For HR, it ensures accurate recordkeeping and compliance with internal staffing policies. The result is a well-organized system that keeps everyone informed and aligned.

Customizable Policies and Configurable Settings

Every organization has unique policies governing leave accrual, eligibility, carryover limits, and holidays. A one-size-fits-all system can’t address these nuances.

Day Off offers highly customizable settings, allowing HR teams to tailor the app to match company-specific policies. Whether you have global teams with different public holidays or multiple departments with unique accrual structures, Day Off can handle it all.

Key customization options include:

- Different types of leave (vacation, sick, parental, unpaid, etc.)
- Variable accrual rates and carryover caps
- Distinct working days and holidays per team or region
- Policy enforcement for blackout dates or minimum notice periods

This flexibility ensures compliance with internal guidelines and local labor laws while keeping the user experience consistent across the organization.
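As a rough illustration of how accrual rates, carryover caps, and region-specific working days interact, consider the Python sketch below. The function names, parameters, and sample figures are assumptions made for this example; they do not describe Day Off's configuration format.

```python
from datetime import date, timedelta


def year_end_balance(carried_in, monthly_accrual, days_taken, carryover_cap):
    """Return (balance at year end, days carried into next year)."""
    balance = carried_in + 12 * monthly_accrual - days_taken
    return balance, min(balance, carryover_cap)


def working_days(start, end, holidays, weekend=(5, 6)):
    """Count days in [start, end] that are neither weekend days nor holidays.

    `weekend` uses Python's weekday numbering (Monday is 0), so a region
    with a Friday-Saturday weekend would pass weekend=(4, 5).
    """
    count = 0
    day = start
    while day <= end:
        if day.weekday() not in weekend and day not in holidays:
            count += 1
        day += timedelta(days=1)
    return count


# 2 days carried in, 1.5 days accrued per month, 12 taken, cap of 5.
print(year_end_balance(2, 1.5, 12, 5))  # (8.0, 5)

# Monday 22 to Friday 26 December 2025, with 25 December as a holiday.
print(working_days(date(2025, 12, 22), date(2025, 12, 26), {date(2025, 12, 25)}))  # 4
```

Keeping the holiday set and weekend definition as parameters is what lets one rule serve teams in different regions.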

Reporting and Analytics for Strategic Decision-Making

In the digital age, data is power. A great vacation tracker does more than record leave; it provides insights into trends, usage, and performance.

Day Off’s reporting and analytics tools generate detailed reports on:

- Leave utilization rates
- Unused or excessive PTO trends
- Peak vacation seasons
- Absence frequency per department or role

These insights help HR leaders make data-driven decisions about resource allocation, staffing plans, and policy updates. Exportable data also simplifies integration with payroll systems and compliance reporting, saving time during audits and financial reconciliations.

By understanding how employees use their leave, companies can identify burnout risks, improve work-life balance, and plan strategically for busy periods.
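A leave utilization rate is simply days taken divided by days entitled. The Python sketch below shows one way to compute it per department from exported records; the record layout and the `utilization_by_department` function are assumptions for illustration, not Day Off's export schema.

```python
from collections import defaultdict


def utilization_by_department(records):
    """records: iterable of (department, days_taken, days_entitled) tuples."""
    taken = defaultdict(float)
    entitled = defaultdict(float)
    for department, days_taken, days_entitled in records:
        taken[department] += days_taken
        entitled[department] += days_entitled
    # Skip departments with no entitlement to avoid dividing by zero.
    return {dept: taken[dept] / entitled[dept] for dept in taken if entitled[dept]}


# Hypothetical sample data: one tuple per employee.
records = [
    ("Engineering", 10, 20),
    ("Engineering", 5, 20),
    ("Sales", 18, 20),
]
rates = utilization_by_department(records)
print(rates)  # {'Engineering': 0.375, 'Sales': 0.9}
```

A low rate, such as Engineering's 37.5% here, is the kind of signal that might prompt HR to look for unused PTO and possible burnout risk.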

Data Security and Privacy

Because vacation tracker apps store sensitive employee information, like personal details, leave types, and medical absences, data security is paramount.

Day Off employs enterprise-grade security protocols to ensure all data remains private and protected.

This includes:

- End-to-end encryption
- Secure cloud storage
- Regular backups
- Role-based access controls
- Compliance with major data protection laws such as GDPR and CCPA

These safeguards give both employers and employees confidence that their personal information is handled responsibly and safely.

Notifications and Smart Reminders

Automated notifications are the unsung heroes of efficient leave management. They keep everyone aligned without manual follow-up.

Day Off sends timely alerts for:

- New leave requests and approvals
- Upcoming vacations or back-to-office dates
- Policy reminders and balance updates

Managers can receive instant notifications through email or Slack, while employees are alerted about approvals, rejections, or approaching leave caps. These smart reminders prevent communication gaps and ensure nothing slips through the cracks, even during busy periods.

Mobile Accessibility and On-the-Go Management

In today’s hybrid and remote work environments, mobile access isn’t optional; it’s essential.

Day Off’s mobile app extends full system functionality to smartphones, allowing users to submit, track, and approve requests from anywhere. Managers can approve leaves while traveling, and employees can plan vacations without waiting to log into a desktop system.

Push notifications keep everyone informed in real time, and the mobile interface mirrors the simplicity and clarity of the web version. This flexibility enhances responsiveness, convenience, and efficiency across the board.

Support and Help Resources

Even the most intuitive systems benefit from accessible support and educational resources.

Day Off offers a variety of help options, including:

- In-app tutorials and walkthroughs
- A comprehensive FAQ library
- Live customer support and chat assistance

These resources empower HR teams and employees to troubleshoot independently and make the most of the system’s capabilities. Responsive support fosters confidence in the platform and ensures a seamless user experience from day one.

How Vacation Tracker Apps Improve Workplace Culture

Beyond streamlining HR tasks, vacation tracker apps contribute significantly to a positive workplace culture. By promoting transparency, fairness, and respect for personal time, tools like Day Off encourage employees to take their well-earned breaks without hesitation or confusion.

When employees see that their organization values rest and recovery, they feel more motivated, loyal, and productive. For HR, this translates into higher retention rates and a healthier, happier workforce.

Frequently Asked Questions (FAQ)

Why should companies switch to a digital vacation tracker?

A digital tracker eliminates manual errors, provides real-time visibility, and automates the approval workflow. It ensures accuracy in leave balances, compliance with policies, and transparency across departments, all while saving HR time and resources.

How does Day Off help employees plan their vacations better?

Day Off allows employees to view their available leave balances, check team calendars, and submit requests instantly. This transparency helps them choose optimal dates and coordinate better with their teams.

Can the system handle different types of leave?

Yes. Day Off supports various leave types, such as vacation, sick leave, personal days, and holidays, with customizable rules for accrual, carryover, and eligibility.

How secure is employee data in Day Off?

Day Off prioritizes data security through encryption, secure cloud storage, and compliance with global data protection standards. Only authorized users can access sensitive information, ensuring privacy at all times.

Does Day Off integrate with other business tools?

Absolutely. Day Off integrates seamlessly with Google Calendar, Outlook, and Slack, keeping all leave information synchronized across the organization’s communication and scheduling platforms.

How does Day Off benefit HR managers specifically?

HR managers gain access to detailed analytics, automated reporting, and real-time visibility into absences and patterns. This helps them plan resources more effectively and make informed policy decisions.

What makes Day Off’s interface user-friendly?

The platform’s intuitive design minimizes clicks, offers clear visuals of leave balances and requests, and is easy to navigate across devices. Employees and managers can complete tasks quickly without training.

How can a vacation tracker improve company culture?

Transparent leave management fosters fairness and trust. When employees know their time off is respected and easy to manage, it strengthens morale, reduces burnout, and enhances loyalty.

Can the app handle global teams with different holidays?

Yes. Day Off allows administrators to define region-specific holidays, time zones, and working days, ensuring flexibility for distributed teams across multiple countries.

How does Day Off help with compliance and audits?

Every request, approval, and balance update is automatically logged, creating a digital audit trail. This simplifies compliance reporting and ensures accountability.

Is mobile access available for all users?

Yes, both managers and employees can use the mobile app to request or approve leave anytime, anywhere. Real-time push notifications keep everyone informed on the go.

What kind of support is available for new users?

Day Off provides extensive onboarding support, video tutorials, FAQs, and responsive customer service. The platform is designed to make setup and transition smooth for organizations of any size.

How does Day Off contribute to productivity?

By automating manual processes and reducing HR workload, Day Off allows teams to focus on meaningful work. Accurate planning ensures projects stay on track even when key staff are on leave.

Conclusion

Vacation tracker apps like Day Off represent a major leap forward in HR efficiency and employee empowerment. They combine ease of use with automation, analytics, and compliance, helping organizations simplify leave management while supporting a culture of well-being.

By offering features such as a user-friendly dashboard, calendar integration, customizable policies, and mobile accessibility, Day Off streamlines processes for both HR teams and employees. The result is a transparent, fair, and stress-free system that benefits everyone, from executives to entry-level staff.

As workplaces continue to evolve, digital tools like Day Off will play a central role in creating more organized, flexible, and people-focused HR operations.